Introduction

Support Vector Machines (SVMs) have become a fundamental tool in machine learning and data science. Effective for both classification and regression tasks, SVMs offer a robust approach to analyzing complex datasets. In this blog post, we will delve into the inner workings of SVMs, explore their key concepts, and see how they can be applied to real-world problems.

What is a Support Vector Machine (SVM)?

A Support Vector Machine is a supervised learning algorithm used for classification and regression, with variants for outlier detection. It handles both linear and nonlinear problems. SVMs tend to work well in high-dimensional spaces, which makes them suitable for a wide range of applications, from text classification and image recognition to bioinformatics and finance.

How does SVM Work?

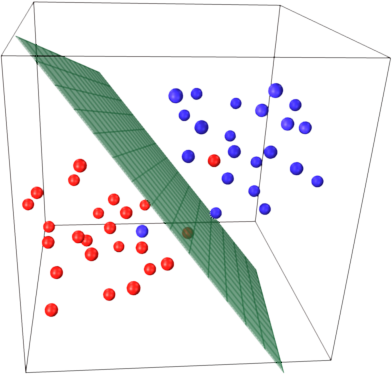

At its core, an SVM works by finding the hyperplane that best separates the classes in a dataset. This hyperplane is positioned to maximize the margin, which is the distance between the hyperplane and the nearest data points from each class. Those nearest points are called support vectors, which is where the algorithm gets its name. By maximizing the margin, SVMs aim to generalize better and classify unseen data more accurately.
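To make the margin idea concrete, here is a minimal sketch in plain Python. The toy points, the labels, and both candidate hyperplanes are made up for illustration, and the helper names (`signed_distance`, `margin_and_support`) are hypothetical. It does not train an SVM; it only measures the margin of a given hyperplane and reports which points lie closest to it.

```python
import math

def signed_distance(w, b, x):
    """Signed distance from point x to the hyperplane w.x + b = 0."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm

def margin_and_support(w, b, points, labels):
    """Return the margin of hyperplane (w, b) and the points that attain it."""
    # Multiplying by the label (+1 or -1) makes the distance positive
    # whenever the point is on the correct side of the hyperplane.
    dists = [y * signed_distance(w, b, x) for x, y in zip(points, labels)]
    margin = min(dists)
    support = [x for x, d in zip(points, dists) if math.isclose(d, margin)]
    return margin, support

# Hypothetical toy data: two classes in 2D, labelled -1 and +1.
points = [(1.0, 1.0), (0.0, 1.0), (1.0, 0.0), (3.0, 3.0), (4.0, 3.0), (3.0, 4.0)]
labels = [-1, -1, -1, 1, 1, 1]

# Candidate 1: the diagonal line x1 + x2 - 4 = 0.
m_diag, sv_diag = margin_and_support((1.0, 1.0), -4.0, points, labels)

# Candidate 2: the vertical line x1 - 2 = 0.
m_vert, sv_vert = margin_and_support((1.0, 0.0), -2.0, points, labels)

print("diagonal margin:", round(m_diag, 3), "closest points:", sv_diag)
print("vertical margin:", round(m_vert, 3), "closest points:", sv_vert)
```

Both lines separate the two classes, but the diagonal one leaves a margin of about 1.41 against 1.0 for the vertical one. An SVM would prefer the diagonal line for exactly that reason, and the points closest to it, (1, 1) and (3, 3), are its support vectors.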

Key Concepts of SVM:

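- Hyperplane: In SVM, a hyperplane is the decision boundary that separates data points into different classes. In two dimensions it is a line, in three a plane, and in general a flat surface with one dimension fewer than the feature space. For a binary classification problem, the SVM chooses the hyperplane that maximizes the margin between the two classes.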

- Support Vectors: Support vectors are the data points closest to the hyperplane, and they alone determine its optimal position. They define the margin: moving or removing any other training point, as long as it stays outside the margin, leaves the hyperplane unchanged.

- Kernel Trick: SVMs can efficiently handle nonlinear classification tasks by employing the kernel trick. A kernel function computes inner products as if the data had been mapped into a higher-dimensional space, where the classes are more likely to be linearly separable, without ever computing that mapping explicitly. Common kernel functions include linear, polynomial, radial basis function (RBF), and sigmoid kernels.
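The kernel trick is easier to trust once you see the numbers agree. The sketch below, in plain Python, compares a degree-2 polynomial kernel with the inner product of an explicit feature map for 2D inputs. The function names (`phi`, `poly2_kernel`, `rbf_kernel`), the sample points, and the `gamma` default are all assumptions chosen for illustration.

```python
import math

def dot(a, b):
    """Plain inner product of two equal-length sequences."""
    return sum(ai * bi for ai, bi in zip(a, b))

def phi(x):
    """Explicit feature map for the degree-2 kernel (x.z)^2 on 2D inputs."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def poly2_kernel(x, z):
    """Homogeneous polynomial kernel of degree 2: k(x, z) = (x.z)^2."""
    return dot(x, z) ** 2

def rbf_kernel(x, z, gamma=0.5):
    """RBF kernel: k(x, z) = exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((xi - zi) ** 2 for xi, zi in zip(x, z))
    return math.exp(-gamma * sq_dist)

# Hypothetical sample points.
x, z = (1.0, 2.0), (3.0, 0.5)

explicit = dot(phi(x), phi(z))   # map to 3D first, then take the inner product
implicit = poly2_kernel(x, z)    # same value, computed in the original 2D space

print("explicit:", explicit, "implicit:", implicit)
print("rbf:", rbf_kernel(x, z))
```

The two polynomial values match up to floating-point rounding, so the kernel gives the higher-dimensional inner product without building the mapped vectors. For the RBF kernel the implied feature space is infinite-dimensional, so the explicit route is not even available and only the kernel form can be computed.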

Applications of SVM:

- Text Classification: SVMs are widely used in natural language processing tasks such as text classification, sentiment analysis, and document categorization. They can effectively classify text documents into different categories based on their content.

- Image Recognition: SVMs play a vital role in image recognition and object detection tasks. They can classify images into different classes, detect objects within images, and even recognize handwritten digits in optical character recognition (OCR) systems.

- Bioinformatics: SVMs are utilized in bioinformatics for tasks such as protein classification, gene expression analysis, and disease diagnosis. They can analyze complex biological data and extract meaningful patterns to aid in medical research and healthcare applications.

Conclusion

Support Vector Machines (SVMs) are a versatile and powerful tool in the field of machine learning. By leveraging the concept of maximum margin hyperplanes, SVMs can effectively classify data, handle nonlinear relationships through kernels, and generalize well to unseen samples. Whether it’s text classification, image recognition, or bioinformatics, SVMs remain widely used in both research and real-world applications. As data-driven decision-making continues to spread, understanding SVMs remains a valuable skill for data scientists and machine learning practitioners alike.
